
The archetype concept.

Introducing the archetype: Caden

Hello, I’m Caden.

I’m an engineer whose research deals with complex sequences of processing and analysing steps, applied to samples and/or data sets. My professional background is mostly informed by materials science, building materials science, materials technology, process engineering, and technical chemistry.

For me, the output of one processing step is the input of a subsequent one. The processed objects can be physical samples (specimens) or data samples. For instance, I synthesise an alloy sample, temper it, and etch it. After that, I analyse the sample in various measurement setups, creating data sets. Thus, in the context of my data management, physical and data samples are treated the same, and both are commonly referred to as “entities”. Similarly, all kinds of processing steps, whether working on physical or data samples, are referred to as “activities”. The resulting graph of entities and activities constitutes my workflow.

A central interest of Caden is the so-called provenance tracking of samples and data. The central requirement is to store data entities (i.e., both data and metadata) and to store parameters of activities (e.g., temperatures, pressures, simulation parameters) in a structured and traceable manner. In addition, entity links must be created to describe a graph topology. The graph can be very complex and non-linear (i.e., contain branches and bifurcations) with a large number of process steps.
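As a rough illustration of this requirement, the following minimal Python sketch records activities with their parameters and links them to the entities they consume and produce. The class names, the `provenance` method and the sample values are hypothetical and chosen only for this example; they do not describe an existing NFDI4Ing tool.

```python
from dataclasses import dataclass, field


@dataclass
class Activity:
    """A processing step; `parameters` holds e.g. temperatures or pressures."""
    name: str
    parameters: dict = field(default_factory=dict)
    inputs: list = field(default_factory=list)   # names of consumed entities
    outputs: list = field(default_factory=list)  # names of produced entities


class Workflow:
    """Hypothetical in-memory workflow graph of entities and activities."""

    def __init__(self):
        self.activities = []
        self.produced_by = {}  # entity name -> Activity that created it

    def record(self, activity):
        self.activities.append(activity)
        for entity in activity.outputs:
            self.produced_by[entity] = activity

    def provenance(self, entity):
        """Return the names of all activities that led to `entity`, upstream first."""
        activity = self.produced_by.get(entity)
        if activity is None:  # an entity with no recorded origin
            return []
        chain = []
        for parent in activity.inputs:
            for name in self.provenance(parent):
                if name not in chain:  # branches may share upstream steps
                    chain.append(name)
        chain.append(activity.name)
        return chain


# Illustrative values only: synthesise, temper and etch a sample, then measure it.
wf = Workflow()
wf.record(Activity("synthesise", {"alloy": "example"}, [], ["sample_raw"]))
wf.record(Activity("temper", {"temperature_C": 450}, ["sample_raw"], ["sample_tempered"]))
wf.record(Activity("etch", {}, ["sample_tempered"], ["sample_etched"]))
wf.record(Activity("measure", {"setup": "microscope"}, ["sample_etched"], ["dataset_1"]))
print(wf.provenance("dataset_1"))  # ['synthesise', 'temper', 'etch', 'measure']
```

Because each activity lists several inputs and outputs, the same structure can also represent branching, non-linear workflows.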

Another challenge for Caden is the cooperation between different institutions. It is quite common for institutions to have their own individual repositories and metadata schemes, often with little overlap with those of other institutions. Consolidation of process steps (i.e. the fragments of the workflow graphs) across institutional boundaries is often difficult, and as of now there is no way to automate this step (e.g. via machine-processable links). One way to solve this issue is the use of a unified research data infrastructure, such as Kadi4Mat or eLabFTW.

A first measure towards fulfilling Caden’s desideratum for a comprehensive compilation of best practices is an in-depth review of the literature. For this, we will screen the publications already used to determine the state of the art in order to extract success stories that fit the requirements of archetype Caden, and subsequently extend this effort to non-German publications. Additionally, this screening allows us to identify scientific engineering work groups in Germany whose profiles match Caden and to set up structured interviews to gather data on their work practices, use cases and so on. Further interview partners will be found amongst the existing partners of NFDI4Ing.

Close interaction with the materials science and engineering community (research area 43 according to the DFG nomenclature) ensures that our efforts are communicated to the community and that feedback can be collected. The structured interviews will be followed up by workshops with engineers, with the goal of formulating novel best practices for archetype Caden that are then recommended for deployment by the community. Additional research will identify freely available (not necessarily open-source) RDM tools that are useful for archetype Caden. This includes input from the interviews and workshops. We will compile a report that allows engineers to evaluate and compare the tools.

The questionnaire to collect information on best-practice examples has been prepared and will be sent out to the community soon. Several presentations describing the project were held; they allowed us to interact with the communities and to gain new insights into their needs and the solutions that are available. Data formats that meet the needs of provenance tracking are being discussed, and their usage will be evaluated.

The task area is led by:

For general Information on Caden please contact:

The archetype concept.

Introducing the archetype: Doris

Hello, I’m Doris.

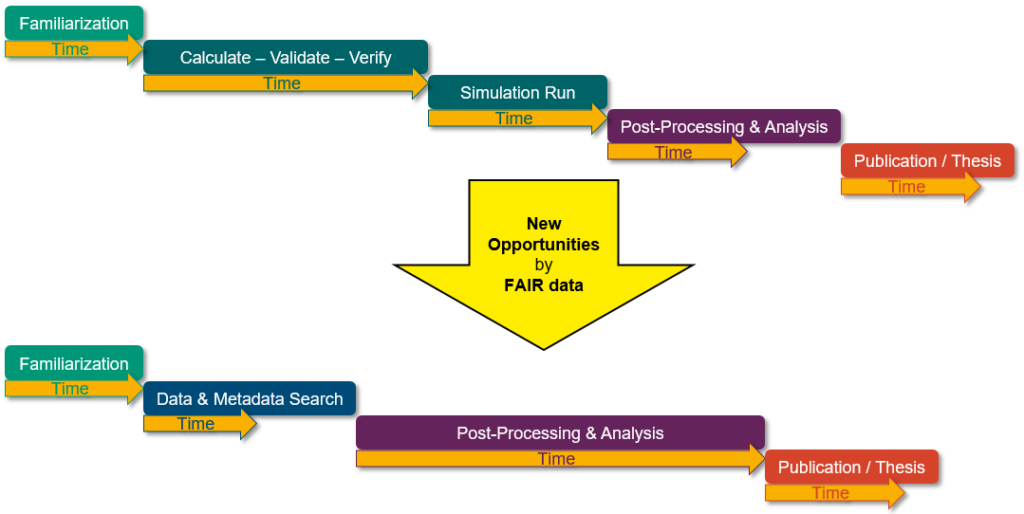

“I’m an engineer conducting and post-processing high-resolution and high-performance measurements and simulations on High-Performance Computing systems (HPC). The data sets I work with are extremely large (hundreds of terabytes or even petabytes) such that they are, by and large, immobile. They are too large to be copied to work stations and the (post-) processing of the experimental and computational data generally is done on HPC systems.

The HPC background mandates tailored, hand-made software, which takes advantage of the high computational performance provided. The data sets accrue in the combustion, energy generation and storage, mobility, fluid dynamics, propulsion, thermodynamics, and civil engineering communities.”

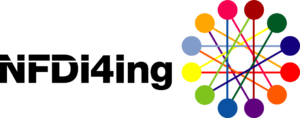

Engineers rely on high-fidelity, high-resolution data to verify and validate their novel modelling approaches. Data sets from high-performance measurement and computation (HPMC) projects are therefore often used as reference (benchmark) data by modellers and experimentalists. Making these data accessible and reusable, so that other research groups can validate their modelling approaches and chosen methods, would be valuable progress and an opportunity for new, data-based research workflows.

Doris faces the key challenge that data from high-performance measurement and computation (HPMC) applications, which form the basis of publications, are not accessible to the public or the research community. In order to gain access to the data as a third-party researcher in the current setup, one has to contact the research group that generated the data and figure out a method to access or obtain the data, which are generally far too large to be copied to work stations.

The data are neither documented nor accompanied by metadata sets, as the semantics used in the engineering sector still need to be adapted to HPMC applications. Common search algorithms and local repositories will be able to find metadata-like keywords in the publications. Data DOIs are supplied to smaller data sets in connection with the publications’ DOI. The multitude of data formats, hardware and software stacks at the different HPC centers further complicates the effective usage of the huge data sets.

The sophisticated HPC background mandates tailored, hand-crafted software, such that a coherent terminology and an interoperable (sub-) ontology have to be established (e.g. in our SIG Metadata & Ontologies and the Base Service S-3). The very specific hardware and software environment at HPC systems makes it time-consuming to get effective access to the data. Therefore, HPC centers need to design new access and file sharing models in order to enable an unhindered opportunity for providing, accessing and post-processing HPMC research data for the community. Software modules simplifying access to the data, and maximizing IO-speeds for the large data sets on the specific platforms will be developed and provided to the community.

Reproducibility of data beyond the life cycle of HPC systems is still an open issue as software container performance is currently too low to be able to reproduce HPC results in an acceptable time frame. Doris will test and compare the performance and feasibility of different containerization tools along with HPC centers.

Showcase software modules for post-processing algorithms will enable other research groups to reduce the time needed to start reusing the individual large data sets. Access to the data stored at the HPC centers will be realized for non-HPC customers. The future widespread accessibility of these unique data sets will further spur machine learning, neural network and AI research on these complex engineering problems, fostering the effort to acquire knowledge from data.

The Doris team is working on several key objectives to achieve FAIR data management for HPMC research data.

Accessibility and access rights, data security and sovereignty: New user models to reuse very large data sets are being developed and supported by new or adjusted software, for instance to extract and harvest metadata, to post-process simulation or measurement data and to replicate data via containerization tools. Corresponding front ends, interfaces and documentations have to be provided in collaboration with infrastructure participants like LRZ, HLRS and JSC, as well as appropriate access rights, security and sovereignty mechanisms and a (creative commons) license system.

Support for third-party users & community-based training, provision of post-processing algorithms and modules: To increase the acceptance of and the contribution to the developments created in NFDI4Ing, tutorials and workshops will be provided to the community along with the project progress and software development. Existing tools will be evaluated and adjusted to facilitate data provision, access and reuse for primary scientists as well as third-party users. Support and training will be supplied in close coordination with base services and community clusters.

Metadata definitions & terminologies, support to data-generating groups: For HPC users, not only the physically relevant parameters and procedures have to be included in the metadata, but also information on exactly how the data were generated (exact specifications of the numerical method, boundary conditions, initial conditions, etc.). To reach maximum performance on the HPC systems, vendor-specific software on many levels (IO, optimization directives and procedures, memory-specific access, etc.) as well as special programming techniques are used. Together with the Base Service S-3, we will develop metadata standards, terminologies and a sub-ontology, enabling other researchers to find, interpret and reuse the data. In cooperation with pilot users, best-practice guidelines on metadata and terminologies are being compiled.

Storage & archive for very large data: Along with the involved service and infrastructure providers, methods and capabilities will be developed to store and archive these large data volumes. In accordance with Base Service S-4 “Storage and archive for very large data” front-end solutions will be implemented, providing searchable and accessible metadata information together with persistent URLs and DOIs.

Reproducibility on large-scale high-performance systems: Reproducibility on large-scale and unique high-performance systems is an unsolved problem. Unlike in experiments, a standard is missing that would describe the level of reproducibility, which in turn may depend on the hardware and software platforms used. This conflict will be addressed by defining minimum standards for reproducibility. Together with the system providers and pilot users, the efficiency of software containers will be evaluated for HPC environments. Best-practice guidelines for reproducibility will be developed and published for HPMC users.

Updates and invitations to future workshops and training tools will be communicated through our newsletter and on the NFDI4Ing website. A special domain for training and support tools will be created along with Base Services (particularly in S-6: Community-based training on enabling data driven science).

Software tools will also be provided on the NFDI4Ing website or through GitLab/GitHub. As first results, a metadata crawler for HPC jobfiles and an HPMC (sub-)ontology can be expected.
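To give an idea of what harvesting metadata from a jobfile could involve, here is a minimal Python sketch. It assumes, purely for illustration, that the jobfile is a Slurm batch script with long-form `#SBATCH --option` directives; the function name and the example job are hypothetical, and the sketch is not the crawler announced above.

```python
import re

# Matches lines such as "#SBATCH --nodes=4" or "#SBATCH --time 01:00:00".
SBATCH_LINE = re.compile(r"^#SBATCH\s+--([\w-]+)(?:[=\s]+(\S+))?")


def harvest_jobfile_metadata(text):
    """Collect long-form #SBATCH options from a job script into a dict."""
    metadata = {}
    for line in text.splitlines():
        match = SBATCH_LINE.match(line.strip())
        if match:
            key, value = match.groups()
            # Flags without a value (e.g. --exclusive) are stored as True.
            metadata[key] = value if value is not None else True
    return metadata


# Invented example job script.
example = """#!/bin/bash
#SBATCH --job-name=dns_channel
#SBATCH --nodes=128
#SBATCH --time 24:00:00
#SBATCH --exclusive
srun ./solver input.cfg
"""
job_metadata = harvest_jobfile_metadata(example)
print(job_metadata)
```

A real crawler would also need to handle short options, other schedulers and the solver configuration itself; the sketch only shows that resource requests in a jobfile are already machine-readable metadata.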

The task area is led by:

For general Information on Doris please contact:

The archetype concept.

Introducing the archetype: Ellen

Hello, I’m Ellen.

I’m an engineer who analyses complex systems comprising a large set of multidisciplinary interdependencies. Working within the computational sciences, I do not work in labs, but exclusively on computers and computing clusters. I conduct research by performing model-based simulations and optimisation calculations, whereby I often utilise algorithms coming from statistics and computer science.

Inputs to my analyses are the scenarios I investigate. They typically are very data-intensive requiring information from many different disciplines, such as politics, business economy, jurisdiction, physics, chemistry, demography, geography, meteorology, etc. My professional background is typically based in electrical, chemical or energy systems engineering and is often complemented by several aspects of computer science, physics, and economics.

A key characteristic of computational sciences is their enormous data requirements. Information from many heterogeneous disciplines has to be compiled. Satisfying these information needs usually takes up a significant amount of time and has to be repeated at regular intervals. Often, the required information is not available at all in sufficient spatial, temporal or content resolution, has diverging references regarding the object of investigation or is simply outdated. The more of the required data is unavailable, the more often scientists are forced to resort to inexact estimates and assumptions, which limit the reliability and legitimacy of their research outcomes.

The aim of this task area is to support engineers in their search for data by facilitating established research methodologies as potential data sources, raising their level of integration and reducing the amount of time required for their application. To this end, in the case of unavailable data sets, scientifically recognised methodological concepts and their software implementations will be made available to generate the missing data. Since neither journal articles nor software codes are suitable to be used as a guide to the implementation of a methodology, conceptual and machine-interpretable workflow descriptions will serve this purpose within the research data landscape.

The major objectives of Ellen are to:

1. support scientists’ data retrieval processes by providing the methodological knowledge and the technical means to generate sought data in case it is not found to be available or well suited.

2. develop a semantic framework to enable the representation and reuse of:

a) scientifically recognized methodological knowledge in form of semantically enriched and machine-interpretable concepts.

b) software implementations, software workflows and data sets.

3. enable the engineering community as well as infrastructure service providers to provide and utilize knowledge-based data storage and retrieval services.

Pursuing the objectives above, the Institute of Energy and Climate Research – Techno-economic Systems Analysis of the Forschungszentrum Jülich (FZJ/IEK-3) and the Leibniz Information Centre for Science and Technology University Library (TIB) lead the activities in the following four measures:

E-1 – Semantic mapping of methodological knowledge: Methodological knowledge will be formalized into semantically rich and machine-interpretable concepts. They will be embedded into a semantic framework, allowing complex scientific workflows to be retraced and understood.

E-2 – Use of methodological knowledge to facilitate data exploration: The methodological concepts will be stored in knowledge graph structures to ensure their findability and accessibility by deploying a concept exploration framework.

E-3 – Connection of data exploration and data generation processes: The automated application of methodological knowledge will be supported by providing web services compiling necessary software and data components. The components will be linked with related information which will jointly be made findable through advanced query algorithms.

E-4 – Community-based validation of data concepts and services: The community will be integrated into the development and validation of the knowledge-based storage and retrieval services. Research findings and best practice guides will be published and disseminated within various community platforms and networks.

Currently, the focus in Ellen lies primarily on the creation of a prototype that utilizes the ORKG Knowledge Graph structures not only to store and link technology data from energy system analysis, but also to extend them to map information about software models. Publication data has been put into context with energy system model software to pool information on how gaps in datasets can be compensated for by model calculations. First hierarchical template structures have been developed to store metadata from publications, datasets, and energy system analysis software models, thereby serving as the basis for linking information about data generation processes and making it jointly discoverable and comparable.
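The idea of linking publications, datasets and software models so that gaps in data can be traced to a model able to fill them can be sketched with a tiny triple store in plain Python. This is only an illustration: it does not use the ORKG API, and all identifiers and predicate names are invented.

```python
# Each statement is a (subject, predicate, object) triple; all names are invented.
triples = [
    ("paper_A", "uses_dataset", "wind_capacity_2019"),
    ("paper_A", "uses_model", "model_X"),
    ("model_X", "generates", "wind_generation_timeseries"),
    ("model_X", "requires", "wind_capacity_2019"),
    ("model_Y", "generates", "heat_demand_timeseries"),
]


def objects(subject, predicate):
    """All objects linked to `subject` via `predicate`."""
    return [o for s, p, o in triples if s == subject and p == predicate]


def models_generating(data_type):
    """Find models that could fill a gap for `data_type`, with their required inputs."""
    return {
        s: objects(s, "requires")
        for s, p, o in triples
        if p == "generates" and o == data_type
    }


print(models_generating("wind_generation_timeseries"))
# {'model_X': ['wind_capacity_2019']}
```

A researcher who cannot find a wind generation time series would thus be pointed to a model that produces one, together with the input data that model needs.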

The task area is led by:

For general information on Ellen please contact:

The archetype concept.

Introducing the archetype: Frank

Hello, I’m Frank.

I’m an engineer who works with a range of different and heterogeneous data sources – from the collection of data from test persons up to manufacturing networks. One of the main challenges during my research process is the synchronisation and access management of data that is generated at different sources simultaneously.

My professional background is mostly informed by production engineering, industrial engineering, ergonomics, business engineering, product design and mechanical design, automation engineering, process engineering, civil engineering and transportation science.

Key challenges for the archetype Frank arise from (a) the variety of involved engineering disciplines and (b) from the collaborative nature of working with many participants. These challenges can be broken down further:

a.1) Diversity of raw data: Data differs greatly in its level of structure in contrast to sensory data and software data. Frank must record, store, describe, analyse and publish a great variety of file types.

a.2) Recording methodology: Efficient documentation of recording methodologies as well as descriptions of research environments and study setups are needed; they have been identified as an integral part of the data set.

b.1) Common language: Regarding collaboration with many participants and due to the variety of disciplines, a standardised vocabulary in terms of discipline-specific issues as well as research methodologies is required but not yet established.

b.2) Anonymisation and access: Research in engineering often involves working with corporate and confidential data. Therefore, anonymisation schemes for distributed data are required. Access to stored data must be restricted, protected and managed in a scalable manner.

The archetype Frank is split into separate measures that build on each other:

Measure F-1 – Target process specification: Based on existing definitions of RDM process frameworks, we consolidate, adapt and test those in terms of Frank’s research activities. We use an iterative process development approach in order to elaborate a specific guideline and decision support regarding how to handle RDM for Frank step-by-step.

Measure F-2 – Technological feasibility and decision-making: Whereas measure F-1 defines the underlying needs that arise from current implementation and practicability problems, measure F-2 examines which technologies at hand can be used for RDM.

Measure F-3 – Design concept of an application program interface: With the previously identified implementation problems regarding RDM practice as well as feasible technological options at hand, a general concept of a program interface must be designed. This concept comprises how to combine and link several technologies.

Measure F-4 – Incentivization of an active and interdisciplinary RDM use: For Frank, it is necessary to consider organisational measures, as Frank’s projects often involve many researchers in an interdisciplinary setting. Incentivization is one of the organisational measures, which ensures compliance with the above-developed RDM target process.

In the context of Measure F-1, we have already collected various frameworks, all with varying depth and purpose. Those frameworks have been assessed and broken down in order to identify the general requirements of an RDM framework. Furthermore, laws and guidelines have been taken into account to meet the requirements of a universally valid framework that will guide the user through all standards and specifications issued by institutions such as the DFG.

In addition, the needs of researchers must not be overlooked. To this end, an exploratory survey was carried out between October 2020 and January 2021. The survey was distributed, among others, by the German Academic Association for Production Technology (WGP) and the Fraunhofer Group for Production. Besides assessing the status quo of RDM, it also asked for specific wishes and needs. In addition, several focus group interviews with representatives of the participants and use cases in the task area Frank, e.g. the Cluster of Excellence Internet of Production at RWTH Aachen University, and research groups at TU Munich and TU Berlin, are currently taking place to investigate the needs of researchers in more detail. In these interviews, the participants are asked about their daily workflows and to what extent they already implement RDM. The results of both the survey and the interviews will be published in summer 2021.

The task area is led by:

For general information on Frank please contact:

The archetype concept.

Introducing the archetype: Golo

Hello, I’m Golo.

I’m an engineer involved in the planning, recording and subsequent analysis of field data. I collect field data from an actual or experimental operation of a cyber physical system. Field data form the basis for optimizing the methodology and parameters of the modelling of a technical system as well as its detailed analysis under real operating conditions along its life cycle. Furthermore, I use field data to prepare data sets for testing system models and enhance learning processes.

To deal with the great variety of field data, I am interested in developing methods and tools for the collection of field data, building concepts and best practice approaches for quality assurance and quality control and enhancing the analysis and reuse of field data. I am an engineer working in fields like production technology, constructive mechanical engineering, systems engineering, robotics and information technology.

Key challenges and objectives

Many environment conditions of a technical system cannot be captured or fully covered. In addition, the great variety of field data, e.g. through different technical systems, requires generalized methods for collection and analysis of data as well as effective concepts to store, archive and access the data itself.

Standardization of these methods with regard to the interoperability and reusability criteria of the FAIR data principles increases the possibilities of reusing the field data. Therefore, a methodological approach is developed and implemented in order to use a digital twin of a physical system as a data representation framework. A digital twin contains current information about its physical twin and provides a history of states and performances under different operating conditions.

The digital twin concept enables the structured representation of research field data in terms of storage, processing, and (re-)use. A digital twin contains information about the system model, methods of collection and analysis of the field data, as well as the field data itself. Furthermore, automatically generated machine-readable metadata, analyses, and derived information from raw data complete the digital twin concept.

Approach & measures

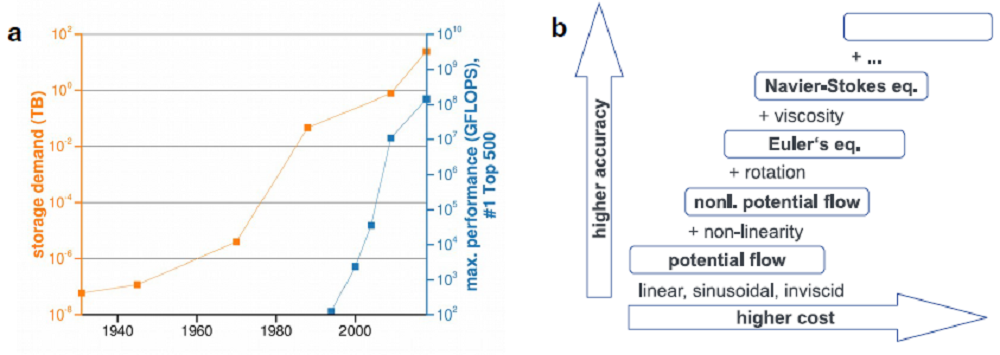

We develop a digital twin concept for organization and processing of research field data iteratively. The concept assumes that the twin has two parts: a digital master and a digital shadow. The digital master is responsible for interpreting and processing research field data. It also includes data analysis methods and data collection methods to facilitate reproduction of results. We determine typical system types and define a minimal set of necessary and sufficient information.

The digital shadow contains research data and the information obtained from its processing. We establish a digital shadow of a technical system. The shadow represents a specific instance of a physical system. By combining several digital shadows of different instances, a better understanding of the general characteristics of research objects, fleets or generations of technical systems can be developed. We also specify metrics for analysis and quality control for different types of field data.

The concept enables the classification of heterogeneous field data for metadata and label generation for human users and machines as well as automatic generation of data set summaries. In addition, best practices and checklists provide detailed descriptions of the phases and processes of working with field data. We integrate the concept into a process ready to use for an exemplary group of pilot users and identify interfaces for existing tools from RDM.

Results

We develop, test and evaluate the digital twin concept for RDM on the basis of three technical systems. The first system represents road traffic. It deals with methods and tools to represent traffic digitally to improve safety. It provides a safety assessment of the road infrastructure as well as a comparison of structural or infrastructural measures. The second system contains several robotic systems under real environment conditions. Through the collection and processing of field data, reference data sets and benchmarks are created, which enable evaluation of the whole robotic system or parts of it. The third technical system is an optical light system for vehicles. It includes modelling of light-based interactions between vehicles and people to increase comfort and safety functions.

We have introduced a first digital twin concept for RDM. The digital master contains information about the experiment in general, e.g. related publications or tools used for analysis of data. In addition, metadata is provided which describes e.g. the research object or the key measurement objective. Each digital master can hold any number of digital shadows. A digital shadow contains the raw data of a unique experiment, divided into primary data and secondary data. The former is the data necessary to address the key measurement objective, while the latter is data which was also recorded but is not relevant for the key measurement objective. Furthermore, metadata is provided, e.g. types of sensors or the experimental design. The concept is shown below in Figure 1.
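In code, the relationship between digital master and digital shadows could be represented roughly as follows. This is a minimal sketch: the class and field names are our own choice for illustration, the example values are invented, and the sketch does not reproduce the actual data model of the task area.

```python
from dataclasses import dataclass, field


@dataclass
class DigitalShadow:
    """Raw data and metadata of one unique experiment."""
    experiment_id: str
    primary_data: dict = field(default_factory=dict)    # needed for the key objective
    secondary_data: dict = field(default_factory=dict)  # recorded, but not needed for it
    metadata: dict = field(default_factory=dict)        # e.g. sensor types, experimental design


@dataclass
class DigitalMaster:
    """General information about the experiment; holds any number of shadows."""
    research_object: str
    key_measurement_objective: str
    publications: list = field(default_factory=list)
    analysis_tools: list = field(default_factory=list)
    shadows: list = field(default_factory=list)

    def add_shadow(self, shadow):
        self.shadows.append(shadow)

    def summary(self):
        """A small, automatically generated data set summary."""
        return {
            "research_object": self.research_object,
            "objective": self.key_measurement_objective,
            "experiments": [s.experiment_id for s in self.shadows],
        }


# Invented example values.
master = DigitalMaster("mobile robot", "localisation accuracy")
master.add_shadow(DigitalShadow(
    "run_001",
    primary_data={"pose": [0.0, 0.1, 0.2]},
    secondary_data={"battery_voltage": [12.1, 12.0, 11.9]},
    metadata={"sensors": ["lidar", "imu"]},
))
print(master.summary())
```

Keeping primary and secondary data in separate fields makes it explicit which recordings are needed for the key measurement objective and which were merely recorded alongside.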

Contact Information

The task area is led by:

For general Info on Golo please contact:

The archetype concept.

Introducing the archetype: Alex

Hi, I’m Alex.

I’m an engineer who develops and carries out bespoke, one-of-a-kind experiments to investigate a technical system with regard to its process variables. The experiment may be real or virtual, using a custom-tailored hardware or software system. Examples of such technical systems are process plants, buildings, prototypes, components, control loops, mechatronic systems, algorithms, mass transport, or interaction interfaces.

I use individual combinations of software, apparatus, methods, and interfaces that vary from case to case. Flexibility is particularly important to me, which makes it all the more important that I can trace the configuration and data flow in my experiments at any time. I am solely responsible for my experiments and need to know exactly what my system is doing. My professional background may be based in production engineering, constructive mechanical engineering, thermofluids, energy systems, systems engineering or construction engineering.

Because Alex pursues highly specialised, bleeding edge research objectives, she has to leverage individual, bespoke one-of-a-kind-setups of equipment, methods and interfaces. Since she typically is solely responsible for a project, Alex wants to know exactly how her system and experiment function. She also commonly needs to adapt the system and experiment to meet her research objective. Alex needs highly flexible software or data models to represent and connect her experiment components, configurations and results. Therefore, Alex often rightfully chooses an iterative or agile approach to reach her research objective.

Alex’s need for flexibility has to be balanced with the necessities of compatibility and reusability. She must be able to trace the data-flow and configuration of her experiment, as well as adapt and repeat it. Alex mostly has to deal with at least a medium volume of data, with no upper limit (gigabytes to terabytes per year and project or more). Therefore Alex’s data rates are in almost all cases too high to manage the generated data manually, even when using document management or version control systems. Additionally, the amount of data is often too large to store or archive the entirety of a generated dataset.

The major objectives of this Task Area are to support Engineers like Alex and provide means to:

1. facilitate re-use and integration of partial solutions (e.g. unified data transfer, increased compatibility, reusability) to improve the traceability of system functions, data flow and configuration, and lower the barrier to manipulating and adapting experiment setups.

2. enable modular, self-documenting software setups (including representations of hardware setups) that automatically generate machine-actionable metadata.

3. store and retrieve specific subsets of (meta-)data in medium to large sized collections in an intuitive and performant way, to encourage publishing and re-using data collections and to facilitate the decoupling of code and data.

Pursuing the objectives above, the Chair of Fluid Systems at TU Darmstadt (TUDa-FST) works together with four participant institutions at TU Darmstadt, TU Clausthal and Saarland University on the following five measures of the task area Alex:

1. Means for integration and synchronization of bespoke system functionality implementations: A-1 “Transmitting data between bespoke partial solutions”

2. Means and background knowledge to design system functionalities modular, interoperable and reusable: A-2 “Modular approach to reusable bespoke solutions”

3. A storage solution that combines performant storage of the primary data and flexible storage of complex metadata: A-3 “Persistent storage of medium to high data volumes with fine grained access”

4. An intuitive and unified basis to interact with data and metadata centred around software modules and hardware as well as transmitted data: A-4 “Core metadata model”

5. Means and background knowledge to reconstruct incomplete metadata by using information from textual sources: A-5 “Retroactive metadata generation for legacy data”

During the initialisation phase of the task area, the vision, overarching concepts and contents have been refined. Concrete use-cases as well as specific topics and contributions corresponding to the measures have been identified. The focus in this early phase has been on the research, assessment and validation of state of the art technologies and methods applicable for the pursued use-cases and their contributions to the needs of engineers like Alex.

The task area is led by:

Members of the Alex-Team are:


Welcome to NFDI4Ing – the National Research Data Infrastructure for Engineering Sciences!

Our Mission

As part of the German National Research Data Infrastructure (NFDI), the consortium aims to develop, disseminate, standardise and provide methods and services to make engineering research data FAIR: data has to be findable, accessible, interoperable, and re-usable.

Engineering sciences play a key role in developing solutions for the technical, environmental, and economic challenges imposed by the demands of our modern society. The associated research processes as well as the solutions themselves can only be sustainable if they are accompanied by proper research data management (RDM) that implements the FAIR data principles first outlined in 2016. NFDI4Ing brings together the engineering communities to work towards that goal.

Engineering communities share a number of similarities: they typically show a high affinity and ability to develop and adapt IT systems, familiarity with standardisation (e.g. industry standards), know-how in quality management, and, last but not least, an established practice of systematic approaches in big projects with many stakeholders. However, the engineering sciences are also highly specialised, with a large number of (sub-)communities that range from architecture and civil engineering all the way to process engineering and thermal engineering. Depending on the research subject, engineers work with data that varies widely in type, object, amount, and quality. Not surprisingly, this results in a wide variety of engineering research profiles and often highly specialised approaches towards research data management.

Our mission as NFDI4Ing is to develop research data management solutions that benefit the engineering community at large.

Our Goals

From its inception, NFDI4Ing has focused on eight objectives that were identified in close collaboration with the engineering communities. Beginning in 2017, we conducted surveys, workshops and semi-standardised face-to-face interviews with representatives from engineering research communities. The results provided a broad overview of the then-current state and needs regarding RDM in engineering sciences. On this basis, we developed the eight key objectives shown below.

Retrace & Reproduce

Scientists of all disciplines are able to retrace or reproduce all steps of engineering research processes. This ensures the trustworthiness of published results, prevents redundancies, and contributes to social acceptance.

Research Software

Auxiliary Information

Sharing & Integration

Authorised Access

Machine Readability

Data Literacy

Engineers profit from improved data- and software-related education (data literacy) and from available domain- and application-specific best practices.

Data Publications

Our Structure

The objectives above take into account the perspectives of engineers as well as infrastructure providers, and they shaped the structure of NFDI4Ing. As a project, NFDI4Ing is subdivided into different task areas. At the centre are the seven task areas focused on addressing the requirements of our Archetypes. The archetypes are methodically derived attempts to cluster typical engineering research methods and workflows and to classify the corresponding challenges in RDM. The archetype task areas are flanked by our Community Clusters, which connect them to the engineering communities, and by the Base Services, which further develop and scale solutions provided by the archetypes and supply specific services and tools for internal and external partners.

To illustrate the purpose of each task area, we commonly draw an analogy to the structure of a company. The archetypes are roughly similar to the R&D department of an enterprise, tasked with innovating and developing solutions to customer requirements. The Base Services correspond to manufacturing and production, picking up the raw solutions from the archetypes and bringing them to market. The Community Clusters represent marketing and public relations, being closely connected to all relevant stakeholders and acting as an intermediary between the consortium and its environment.

You can see more details on the individual task areas in the diagram below.

Overview of the structure and work programme of NFDI4Ing