The NFDI4Ing task area DORIS designs transferable research-data concepts and software infrastructure for data from high-performance measurement and computing (HPMC). Data sets from HPMC projects are often used as reference (benchmark) data for models, simulations and experiments. With the current state of the art in the engineering HPMC community, HPMC data are at most partially FAIR. Our goal is to identify or develop best-practice tools and to advise HPMC researchers on FAIR research-data management, focusing on users at the (national) tier 1 computing centers (HLRS, JSC, LRZ). We want to enable and encourage researchers to provide data sets to the community and to reuse existing data, and thereby to develop new user models for reusing very large data sets.

Approaches and results

To verify and optimize our developments and use cases, test and regular compute projects at LRZ (SuperMUC-NG), HLRS (HAWK/Vulcan) and JSC (JUWELS) have been successfully requested and are currently running. DORIS has succeeded in involving inter-institutional stakeholders from engineering research groups, high-performance computing centers and university libraries to improve the implementation of the FAIR data principles within the HPMC domain:

F – Findability

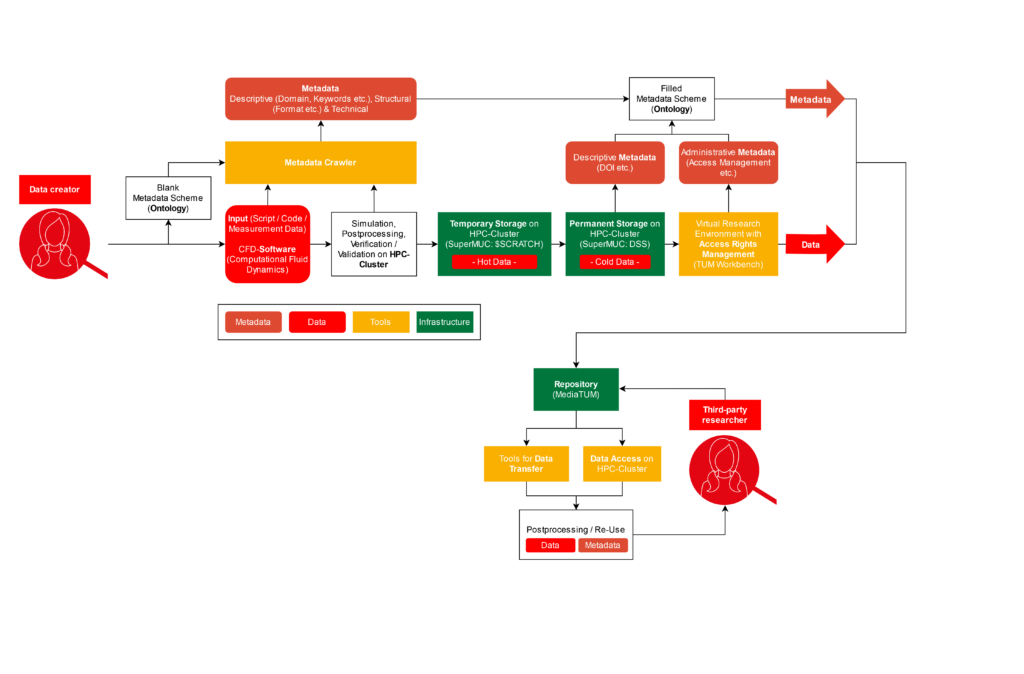

The data are mostly archived on tape at HPC centers, in the personal account of the researcher who generated them. Often, these data are neither documented nor equipped with adequate metadata. To make data findable, a searchable and indexable interface between local storage and a front end has been provided (TUM Workbench ↔ LRZ DSS storage) and connected to a repository (mediaTUM). A users' guide has been published to support the scientific community and to gather feedback from testing for further improvements.

As human- and machine-readable metadata are essential for the discovery of datasets, DORIS recently released a metadata crawler. The crawler creates ontology-conforming metadata fields during the research process and enables automated extraction of metadata from simulations or experiments conducted on HPMC systems.
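The DORIS crawler itself is not reproduced here. As a rough illustration of what automated metadata extraction from an HPMC run can look like, the following Python sketch walks one run directory and collects a metadata record. The parameter-file name `params.txt`, its `key = value` format and the record layout are hypothetical assumptions, not the crawler's actual interface; a real crawler would additionally map the collected fields onto an ontology.

```python
"""Minimal metadata-crawler sketch for one simulation run directory.

Hypothetical assumptions (not the DORIS crawler's design):
- solver parameters live in a plain-text file 'params.txt' with 'key = value' lines;
- every other file is a data file whose name, size and SHA-256 checksum are recorded.
"""
import hashlib
import json
from pathlib import Path


def parse_params(path: Path) -> dict:
    """Parse 'key = value' lines, ignoring blank lines and '#' comments."""
    params = {}
    for line in path.read_text().splitlines():
        line = line.split("#", 1)[0].strip()
        if not line or "=" not in line:
            continue
        key, value = (part.strip() for part in line.split("=", 1))
        params[key] = value
    return params


def sha256(path: Path, chunk_size: int = 1 << 20) -> str:
    """Checksum a file in chunks so that large outputs never have to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def crawl_run(run_dir: Path, param_file: str = "params.txt") -> dict:
    """Collect one metadata record (parameters plus file inventory) for a run directory."""
    record = {"run": run_dir.name, "parameters": {}, "files": []}
    params_path = run_dir / param_file
    if params_path.is_file():
        record["parameters"] = parse_params(params_path)
    for path in sorted(run_dir.rglob("*")):
        if path.is_file() and path != params_path:
            record["files"].append({
                "name": str(path.relative_to(run_dir)),
                "size_bytes": path.stat().st_size,
                "sha256": sha256(path),
            })
    return record


def to_json(record: dict) -> str:
    """Serialize a record as machine-readable, human-inspectable JSON."""
    return json.dumps(record, indent=2, sort_keys=True)
```

Calling `print(to_json(crawl_run(Path("run_0001"))))` on a run directory would print its record. Checksums are included because they let a later reuser verify that a transferred copy matches the archived original.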

A – Accessibility

The established storage-to-front-end interface is accessible through the local virtual research environment (see F – Findability), and an individual access management system can be implemented on top of it. This is an important milestone for making data available while maintaining data security and sovereignty. The current legal foundations regarding the protection of legitimate expectations at HPC centers have been outlined in an NFDI4Ing-internal note. Additionally, a generic template for an HPMC data management plan has been published.

Because of their high storage demands (hundreds of TB or even PB), HPMC data are often immobile and too large to be copied to local workstations. DORIS has been evaluating transfer tools for sharing large datasets among the participating research institutions and HPC facilities, and we are testing software that simplifies data access (e.g., GridFTP, Globus). With new data-sharing endpoints for pilot users at RWTH and TUM, a large HPC dataset was made accessible and interoperable at both ends. Because transfer tools between supercomputing facilities still show low performance or lack interoperability, DORIS will investigate new sharing options and direct interactive access to HPMC data (e.g., via the LRZ Compute Cloud).
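To make "too large to be copied" concrete: over an ideal 10 Gbit/s link, 100 TB take 8 × 10^14 bit / 10^10 bit/s = 80,000 s, or roughly 22 hours. The short Python sketch below tabulates such estimates. The link speeds and the 70 % efficiency factor are illustrative assumptions, not measurements at LRZ, HLRS, JSC, RWTH or TUM.

```python
"""Back-of-the-envelope transfer times for large HPMC datasets.

Link speeds and the efficiency factor are illustrative assumptions only.
"""


def transfer_time_hours(size_tb: float, link_gbit_s: float, efficiency: float = 1.0) -> float:
    """Hours to move size_tb terabytes (10**12 bytes) over a link of
    link_gbit_s gigabits per second (10**9 bit/s), at the given fraction
    of the nominal bandwidth."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = size_tb * 1e12 * 8
    seconds = bits / (link_gbit_s * 1e9 * efficiency)
    return seconds / 3600


# Tabulate 100 TB and 1 PB over 1, 10 and 100 Gbit/s at an assumed 70 % efficiency.
for size_tb in (100, 1000):
    for link in (1, 10, 100):
        hours = transfer_time_hours(size_tb, link, efficiency=0.7)
        print(f"{size_tb:>5} TB over {link:>3} Gbit/s: {hours:8.1f} h ({hours / 24:5.1f} days)")
```

Even with optimistic assumptions, petabyte-scale copies take weeks on common links, which is why direct access at the HPC facility is attractive.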

I – Interoperability

The use of a formal and broadly applicable language and common terminology is needed for (meta)data to interoperate with applications or workflows for analysis, storage and processing. Due to the absence of thorough semantics for describing engineering sciences in HPC, DORIS aims at defining and disseminating metadata standards for HPMC environments and is developing a comprehensive HPMC ontology, following up on the recently released NFDI4Ing ontology. Our goal is the automatic retrieval of metadata from all processing steps by the newly developed metadata crawler (see F – Findability). The functionality of this crawler still has to be tested, and an interaction or merge with the DataCite schema used by LRZ on SuperMUC-NG is a possible further enhancement.

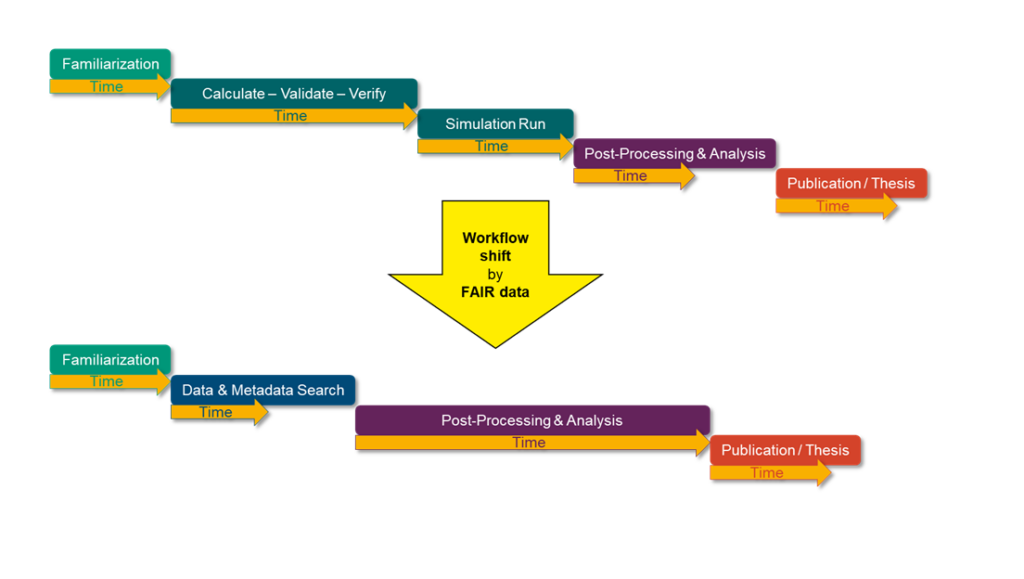
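A possible merge with DataCite could start with a mapping like the following Python sketch. It assumes a hypothetical crawler record with `parameters` (a dict of strings) and `files` (each entry carrying `size_bytes`). The attribute names follow the DataCite REST API JSON representation to the best of our knowledge; placing simulation parameters in a `TechnicalInfo` description is a hypothetical choice, not an agreed HPMC convention, and the field list should be checked against the DataCite schema version actually used at LRZ.

```python
"""Sketch: map a crawled run record onto DataCite-style metadata.

Assumptions: DataCite REST API JSON attribute names; the parameter-to-
'TechnicalInfo' mapping and the free-text resourceType are illustrative only.
"""
from datetime import date
from typing import Optional

# Mandatory DataCite properties checked here; the DOI (Identifier) is assigned
# at registration and is therefore not checked.
MANDATORY = ("creators", "titles", "publisher", "publicationYear", "types")


def to_datacite(record: dict, creators: list, title: str, publisher: str,
                year: Optional[int] = None) -> dict:
    """Build a DataCite-style attribute dict from a crawler record."""
    params = record.get("parameters", {})
    files = record.get("files", [])
    total_bytes = sum(f["size_bytes"] for f in files)
    technical = "; ".join(f"{k}={v}" for k, v in sorted(params.items()))
    return {
        "creators": [{"name": name} for name in creators],
        "titles": [{"title": title}],
        "publisher": publisher,
        "publicationYear": year if year is not None else date.today().year,
        "types": {"resourceTypeGeneral": "Dataset", "resourceType": "Simulation output"},
        "sizes": [f"{total_bytes} bytes", f"{len(files)} files"],
        "descriptions": (
            [{"description": technical, "descriptionType": "TechnicalInfo"}] if technical else []
        ),
    }


def missing_mandatory(metadata: dict) -> list:
    """Return the mandatory properties that are absent or empty."""
    return [key for key in MANDATORY if not metadata.get(key)]
```

Running `missing_mandatory` before submission gives an early, automated check that a crawled record can actually be registered.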

R – Reusability

The further processing of archetypal research data generated in the HPMC community is usually executed on HPC systems. This complicates reuse, because computing time on HPC systems is required to efficiently reuse, reproduce or post-process the corresponding data. To evaluate the feasibility of containers (Charliecloud, Singularity) for reproducibility on HPC systems, test runs on SuperMUC/LRZ, Vulcan/HLRS and JUWELS/JSC have been conducted. According to our current results, many issues concerning reusability and reproducibility with containerization software remain unsolved: results and performance differ from one facility to another, depending on the hardware and the installed software tools. So far, no viable reproduction of HPMC data at the required scale of thousands or even hundreds of thousands of CPU cores has been achieved, so further test runs with more computing time will be conducted. We plan to compare the workflow of CFD simulations, from compilation to post-processing, in its vanilla and in a containerized form. In doing so, we will investigate the differences in parallel performance, for computation and for large-file I/O, between the vanilla and the containerized version of the application. The results will be published within the context of NFDI4Ing, and DORIS will develop and share best-practice recommendations for the reproducibility of simulations on HPC systems.
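The planned vanilla-versus-container comparison can be summarized with standard strong-scaling metrics. Relative to the smallest measured core count p_ref, the speedup is S(p) = T(p_ref) / T(p) and the parallel efficiency is E(p) = S(p) · p_ref / p; the container overhead at a given core count is (T_container - T_vanilla) / T_vanilla. The Python sketch below computes these from wall-clock times. The `Run` type and all timings are hypothetical placeholders, not DORIS results.

```python
"""Strong-scaling and container-overhead metrics from wall-clock timings.

All timings below are placeholders; no measurements are implied.
"""
from dataclasses import dataclass


@dataclass
class Run:
    """One timed run (hypothetical record type): core count and wall-clock seconds."""
    cores: int
    seconds: float


def strong_scaling(runs: list) -> dict:
    """Map core count -> (speedup, efficiency) relative to the run with the fewest cores."""
    ref = min(runs, key=lambda r: r.cores)
    result = {}
    for r in runs:
        speedup = ref.seconds / r.seconds
        result[r.cores] = (speedup, speedup * ref.cores / r.cores)
    return result


def container_overhead(vanilla: list, containerized: list) -> dict:
    """Relative overhead (T_container - T_vanilla) / T_vanilla for core counts measured in both."""
    t_v = {r.cores: r.seconds for r in vanilla}
    t_c = {r.cores: r.seconds for r in containerized}
    return {p: (t_c[p] - t_v[p]) / t_v[p] for p in sorted(t_v.keys() & t_c.keys())}


# Placeholder timings for illustration only.
vanilla = [Run(1024, 1000.0), Run(4096, 312.5)]
containerized = [Run(1024, 1100.0), Run(4096, 400.0)]

for label, runs in (("vanilla", vanilla), ("container", containerized)):
    for cores, (s, e) in strong_scaling(runs).items():
        print(f"{label:<9} {cores:>6} cores: speedup {s:5.2f}, efficiency {e:6.1%}")
for cores, overhead in container_overhead(vanilla, containerized).items():
    print(f"overhead  {cores:>6} cores: {overhead:+.1%}")
```

The same functions apply unchanged if the computation phase and large-file I/O are timed separately, which keeps the two sources of container overhead apart.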

Summary

The focus of DORIS lies in a holistic analysis of engineering HPC data workflows. New concepts for data storage, findability, access and transfer are being evaluated, and a viable concept using the local infrastructure (TUM, LRZ) has been implemented. It enables findability through rich, interoperable and searchable metadata within a repository, as well as accessibility through the storage infrastructure and individual access-rights management. By offering efficient transfer tools or direct data access and post-processing options at the HPC cluster, we facilitate the interoperability and reusability of HPMC data.

Outreach and participation

The full benefit of DORIS lies in the use of previously inaccessible data by third-party research groups. To transfer the knowledge and results gained, and to promote preexisting or newly developed tools, DORIS regularly provides community-based training and workshops. In this context, we cordially invite all interested users to the upcoming DORIS online workshop on April 6, 2022. The workshop is addressed to users at the (national) tier 1 computing centers, researchers with large, immobile data, and research-data management personnel. Further information, registration and updates:

https://www.epc.ed.tum.de/en/aer/news-events/news/article/workshop/

To get future scientists interested in RDM and to enable them to apply RDM skills during their undergraduate studies, a new module on RDM in engineering sciences has been developed at TU Munich. The lecture will premiere in the summer semester 2022 with internal and external lecturers and could be expanded modularly to other universities or research domains.

Contact

DORIS Newsletter: https://lists.tu-darmstadt.de/mailman/listinfo/nfdi4ing_taskarea_doris

General inquiries: info-doris@nfdi4ing.de

Website: https://nfdi4ing.de/archetypes/doris/

Spokesperson: Prof. Dr.-Ing. Christian Stemmer | christian.stemmer@tum.de

Participants: TU Munich (TUM), High-Performance Computing Center Stuttgart (HLRS), Leibniz Supercomputing Centre (LRZ), RWTH Aachen

B. Farnbacher, N. Hoppe, V. Sdralia, Ch. Stemmer