Alex is all about bespoke, one-of-a-kind physical or virtual experiments. Robust data management before and after the experiment is essential to make research FAIR. Get to know the newest approaches currently under development in Alex!

The NFDI4Ing task area Alex addresses data management challenges faced by engineers who develop and carry out bespoke, one-of-a-kind physical or virtual experiments. Five institutes participate in Alex: the Chair of Fluid Systems (TUDA), Reactive Flows and Diagnostics (TUDA) and Simulation of Reactive Thermo-Fluid Systems (TUDA), as well as the Research Center Energy Storage Technologies at TU Clausthal and the Lab for Measurement Technology at Saarland University. Below, we give an insight into our current activities.

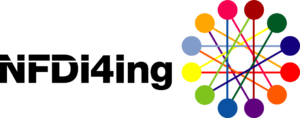

Connecting Plots and Data: PlotID

The language of engineering science is plots and diagrams of (virtual or physical) experiments. Yet when looking at these diagrams, the underlying data and how they were processed are rarely apparent or documented. As a first step towards FAIR plots and diagrams, researchers from the Chair of Fluid Systems at TU Darmstadt proposed a concept for connecting research data, the associated scripts and the resulting plot, and presented it at the NFDI4Ing conference. This framework is called PlotID. PlotID works in two steps: first, the plot is labelled with an ID; second, the research data are packed. The ID then allows the specific data behind the diagram to be reconnected with it and shared.

A first MATLAB-based reference implementation is currently under development, and a pre-release is available for public testing.
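The two PlotID steps can be illustrated with a short Python sketch. It is not the PlotID API (the reference implementation is MATLAB-based): the ID format, the function names `make_plot_id` and `pack_research_data`, and the `manifest.json` layout are hypothetical assumptions chosen only to show the idea of tying a plot and its data together through one ID.

```python
import json
import uuid
import zipfile
from pathlib import Path


def make_plot_id(prefix: str = "PLOT") -> str:
    """Step 1 (hypothetical ID scheme): a prefix plus 8 random hex digits.

    The returned string would be printed onto the figure so that the ID
    travels with the plot wherever it is shown.
    """
    return f"{prefix}-{uuid.uuid4().hex[:8]}"


def pack_research_data(plot_id: str, files: list, out_dir: Path) -> Path:
    """Step 2: bundle the data files and scripts behind a plot into one
    archive named after the plot's ID, so the ID leads back to the data."""
    archive = Path(out_dir) / f"{plot_id}.zip"
    with zipfile.ZipFile(archive, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for f in map(Path, files):
            zf.write(f, arcname=f.name)  # store flat, without local directories
        # A small manifest records which files belong to this plot.
        manifest = {"plot_id": plot_id, "files": [Path(f).name for f in files]}
        zf.writestr("manifest.json", json.dumps(manifest, indent=2))
    return archive
```

With matplotlib, for instance, step 1 could be a single `fig.text(...)` call placing the ID in a corner of the figure; the archive from step 2 is what would then be shared alongside the plot.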

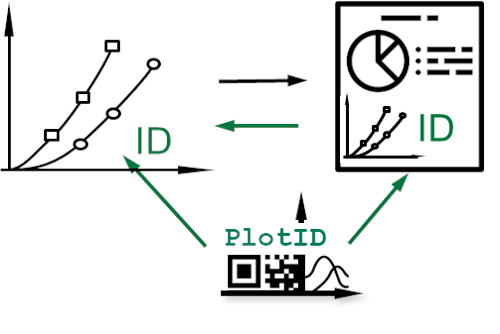

From Data Sheets to Machine-Readable Characteristic and Impact Sheets (CIS)

Regardless of whether a model or a physical test rig is built for a simulation or an experiment, respectively, it is necessary to find and access data on the characteristics of its components, be they sensors, actuators or passive components. Within Alex, a concept has been developed for creating machine-readable characteristic and impact sheets (CIS). As an alternative to conventional data sheets, CIS use controlled vocabularies from industry and W3C standards to create a semantic information model of components adapted to individual applications. They can be serialized in machine-readable formats such as RDF/XML, JSON-LD or Turtle, which allows them to be integrated into the software architecture of test rigs or into automated simulation runs. We presented the concept on a poster at the annual meeting of the Process and Plant Engineering Community, and a conference paper is under review for the 13th International Fluid Power Conference in Aachen.
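To give an impression of what a machine-readable sheet could look like, the following Python sketch builds a minimal JSON-LD document for a sensor. Only the `sosa` prefix refers to a real W3C vocabulary (SOSA); the `ex` namespace, the property names, the manufacturer "ACME" and `make_cis` are hypothetical placeholders, not the vocabulary or schema used by Alex.

```python
import json

# JSON-LD context: "sosa" is the W3C SOSA vocabulary; "ex" is a placeholder
# namespace standing in for an industry vocabulary (hypothetical).
CONTEXT = {
    "sosa": "http://www.w3.org/ns/sosa/",
    "ex": "https://example.org/cis#",
}


def make_cis(component_id: str, manufacturer: str,
             range_min: float, range_max: float, unit: str) -> dict:
    """Build a minimal, hypothetical CIS for a sensor as a JSON-LD document."""
    if range_min >= range_max:
        raise ValueError("range_min must be smaller than range_max")
    return {
        "@context": CONTEXT,
        "@id": f"ex:{component_id}",
        "@type": "sosa:Sensor",
        "ex:manufacturer": manufacturer,
        "ex:measuringRangeMin": range_min,
        "ex:measuringRangeMax": range_max,
        "ex:unit": unit,
    }


cis = make_cis("PT-01", "ACME", 0.0, 10.0, "bar")
print(json.dumps(cis, indent=2))
```

Because the output is plain JSON-LD, an RDF library such as rdflib (version 6 or later bundles a JSON-LD parser) should be able to load it and re-serialize it as Turtle or RDF/XML for a test rig's software.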

Electronic Lab Books for Bespoke Experiments

FAIR principles require good data management practice in the lab. At the Research Center Energy Storage Technologies of Clausthal University of Technology, battery test experiments are evaluated. After taking an inventory of the software and data storage formats in use, the team is investigating means of standardization on the way to automated, machine-readable data storage. As a first step, electronic lab books are being introduced.

An electronic lab book allows all information on experiments to be curated in one place, just like a paper lab book, but it adds advantages for collaborative work: every work step is documented as a data record, time stamps guarantee immutability, and all data are digitally searchable across experiments. eLabFTW is an open-source electronic lab book tool in blank-page format, i.e., it imposes no specifications regarding structure or formats. This flexibility is particularly valuable for documenting, storing and evaluating the data used by and created in bespoke experiments. Electronic lab books can thus be considered a first step towards automated, machine-readable data storage.
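The two properties named above, tamper-evident time-stamped records and search across experiments, can be sketched in a few lines of Python. This is a hypothetical in-memory illustration, not how eLabFTW works internally: eLabFTW offers trusted timestamping through an external timestamp authority, which the sketch replaces with a plain SHA-256 digest stored next to each entry. The names `Entry` and `LabBook` are assumptions.

```python
import hashlib
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class Entry:
    experiment: str
    text: str
    timestamp: str  # ISO 8601, UTC
    digest: str     # SHA-256 over experiment, timestamp and text


def _digest(experiment: str, text: str, timestamp: str) -> str:
    payload = f"{experiment}\n{timestamp}\n{text}".encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


@dataclass
class LabBook:
    entries: list = field(default_factory=list)

    def add(self, experiment: str, text: str) -> Entry:
        """Append a new, time-stamped record; existing records are never edited."""
        ts = datetime.now(timezone.utc).isoformat()
        entry = Entry(experiment, text, ts, _digest(experiment, text, ts))
        self.entries.append(entry)
        return entry

    @staticmethod
    def verify(entry: Entry) -> bool:
        """True if the record still matches the digest taken when it was written."""
        return entry.digest == _digest(entry.experiment, entry.text, entry.timestamp)

    def search(self, term: str) -> list:
        """Case-insensitive full-text search across all experiments."""
        term = term.lower()
        return [e for e in self.entries if term in e.text.lower()]
```

A digest like this only proves integrity if it is itself stored somewhere the author cannot change, which is exactly the role a trusted timestamp authority plays.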

Reporting via GitLab Pages

One of the main purposes of Alex, and of NFDI4Ing in general, is to bring sophisticated research data management approaches closer to researchers in the engineering sciences. To lead by example, Alex documents its progress on a GitLab Pages website in addition to the usual internal progress reports. Participants of Alex can use a simple Markdown template to write about their status or to present results such as new tools, technology reviews, milestones, publications or workshop summaries. These posts are then published on the GitLab Pages site, where anyone interested in novel approaches to research data management can read them. The platform is expected to go online during February.
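What a simple Markdown template for such posts might look like can be sketched with Python's `string.Template`. The YAML front-matter fields, the category list (derived from the result types listed above) and `render_report` are assumptions for illustration; the actual Alex template is not reproduced here.

```python
from string import Template

# Hypothetical post template: YAML front matter followed by a Markdown body.
REPORT_TEMPLATE = Template("""\
---
title: "$title"
author: "$author"
date: $date
category: $category
---

$body
""")

# Post types taken from the kinds of results mentioned in the text.
CATEGORIES = {"status", "tool", "technology review", "milestone",
              "publication", "workshop summary"}


def render_report(title: str, author: str, date: str,
                  category: str, body: str) -> str:
    """Fill the template; reject unknown categories so the site stays consistent."""
    if category not in CATEGORIES:
        raise ValueError(f"unknown category: {category!r}")
    return REPORT_TEMPLATE.substitute(
        title=title, author=author, date=date, category=category, body=body
    )
```

Keeping the front matter fixed and validating the category is one way to let many contributors write freely in Markdown while the published pages remain uniform.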

New Data Center as Workflow Test Bed

At the Institute for Reactive Flows and Diagnostics (RSM), a new data center (2 petabytes of raw storage and a high-performance cluster), funded by TU Darmstadt, has been built. In addition to providing RDM-compliant storage, backup and archiving of the institute's data, the data center serves as a test bed and benchmark environment for a secure research data workflow and for exploring which technical boundary conditions are feasible and which are necessary. The focus lies particularly on user acceptance, data integrity and data security. Experiments at a generic hot gas test bench used in the SFB-TRR 150 serve as a benchmark data set for testing and optimizing the RDM workflow. This includes research on self-documenting test rig software, the assessment of new strategies for evaluating measurement data, and the publication of data sets in scientific journals. The new RSM data center environment is intended to support the institute members along the entire RDM path and will be further optimized and adapted based on the research results of the NFDI4Ing project.
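One common building block for the data-integrity goal is a checksum manifest: record a SHA-256 hash for every file when a data set is stored, and re-check the hashes later or after a transfer. The Python sketch below shows the idea; the function names are hypothetical, and the approach resembles the SHA-256 manifests of the BagIt packaging format (RFC 8493), without implying that the RSM workflow uses it.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large measurement files fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(root: Path) -> dict:
    """Map every file below root (as a POSIX-style relative path) to its hash."""
    root = Path(root)
    return {
        p.relative_to(root).as_posix(): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def verify_manifest(root: Path, manifest: dict) -> list:
    """Return the relative paths that are missing or whose content changed."""
    problems = []
    for rel, expected in manifest.items():
        p = Path(root) / rel
        if not p.is_file() or sha256_of(p) != expected:
            problems.append(rel)
    return problems
```

Storing the manifest alongside the archived data set allows anyone, including a later user of the published data, to confirm that the files are bit-identical to what was recorded.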

Automatic Metadata Annotation in Bespoke Experiments

Annotating multidimensional data with their metadata and custom labels is often user- and application-specific and is considered laborious. Using a gas mixing system for characterizing gas sensor systems for indoor air quality monitoring as an application, the Lab for Measurement Technology aims to automatically integrate into the metadata of the measurement file all information needed to interpret the experiment and use it in the long term. Documenting all relevant parameters and providing them automatically on a central platform is essential. The file must be accessible and interpretable by both humans and machines at different levels of access. For this purpose, the Lab for Measurement Technology is evaluating several open-source tools as well as complete solutions with regard to their interoperability and user-friendliness.
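The principle of writing rig parameters into the measurement file automatically, instead of typing them in by hand, can be sketched in Python. The parameter names, units, column layout and `write_measurement` are hypothetical; JSON is used only to keep the example self-contained, whereas a real setup might use a binary format such as HDF5 with its attribute mechanism.

```python
import json
from datetime import datetime, timezone
from pathlib import Path

# Hypothetical parameters of the gas mixing system that every run must document.
REQUIRED_SETUP_KEYS = ("gas", "concentration_ppm", "total_flow_ml_min",
                       "relative_humidity_pct")


def write_measurement(path, samples, setup: dict, sensor_id: str) -> None:
    """Write samples and automatically collected metadata into one JSON file."""
    missing = [k for k in REQUIRED_SETUP_KEYS if k not in setup]
    if missing:
        # Refuse to write a file that could not be interpreted later.
        raise ValueError(f"setup is missing required parameters: {missing}")
    document = {
        "metadata": {
            "created_utc": datetime.now(timezone.utc).isoformat(),
            "sensor_id": sensor_id,
            "setup": dict(setup),  # taken from the rig configuration, not typed by hand
            "columns": ["time_s", "resistance_ohm"],
            "n_samples": len(samples),
        },
        "data": samples,
    }
    Path(path).write_text(json.dumps(document, indent=2), encoding="utf-8")
```

Because the metadata travel inside the same file as the data, the measurement stays interpretable even when it is copied away from the lab or uploaded to a central platform.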

M. Leštáková, J. Lemmer, K. Logan, L. Eisner, S. Wagner, C. Schnur, H. Lensch