Using our benchmark suite, we investigated object storage systems. Based on the results, we compiled usage guidelines for improving transfer speeds. Running the suite regularly also lets us continuously monitor connection quality.

Research data is commonly written once when generated, rarely changed thereafter, and potentially read multiple times for different analyses. Object storage is well suited to this usage pattern, and to research data in particular, thanks to its built-in metadata, versioning, and scalability.

Goals and implementation

In the German research landscape, several institutions operate their own object storage solutions. These are generally interoperable, but because the systems and the connections between them differ, transfer speeds differ as well. We set out to create a benchmark suite to investigate and quantify these differences. We expected the results to point to ways of improving transfer speeds and to help determine which storage locations are most suitable for given data, based on who is likely to access it. Running the benchmarks regularly also lets us quickly identify problems with storage systems or connections as they arise. Additionally, we wanted to see how the composition of research data affects these systems and the tools that transfer data between them.

Based on the results of our benchmark suite, we compiled a set of recommendations on how best to arrange data and transfer it between different storage locations. These recommendations enable engineering scientists to transfer their research data sets more efficiently.

The benchmark suite consists of Bash scripts that set up and execute the benchmarks using one of two supported tools, MinIO Client or rclone, both common command-line programs for managing cloud storage. The scripts can be executed on any system with a Bash shell, and both tools are available for Linux, Windows, and macOS. The results are visualized with Python scripts in JupyterLab.
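The suite itself is written in Bash. Purely to illustrate the measurement idea, a minimal Python sketch of timing one transfer command and deriving its throughput might look like the following. The function name `measure_throughput`, the alias `target`, and the bucket `bench` are assumptions made for this example and are not taken from the suite.

```python
import subprocess
import time


def measure_throughput(command, payload_bytes):
    """Run one transfer command and return (seconds, MiB per second).

    `command` is an argument list such as
    ["mc", "cp", "--recursive", "testdata/", "target/bench/"]
    where "target" and "bench" are hypothetical alias and bucket names.
    `payload_bytes` is the total size of the transferred test data.
    """
    start = time.perf_counter()
    # check=True raises CalledProcessError if the transfer tool fails,
    # so a failed run is never reported as a (misleadingly fast) result.
    subprocess.run(command, check=True)
    elapsed = time.perf_counter() - start
    mib_per_second = payload_bytes / (1024 * 1024) / elapsed
    return elapsed, mib_per_second
```

On a live system shared with other users, a single run says little. Repeating the measurement and comparing the spread of results is likely more informative.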

Analysis of results

Based on a range of benchmarks that we conducted with different test data, we have made three key observations.

First, of the benchmarked tools we recommend MinIO Client, which proved equal or superior to rclone across the board in terms of transfer speed.

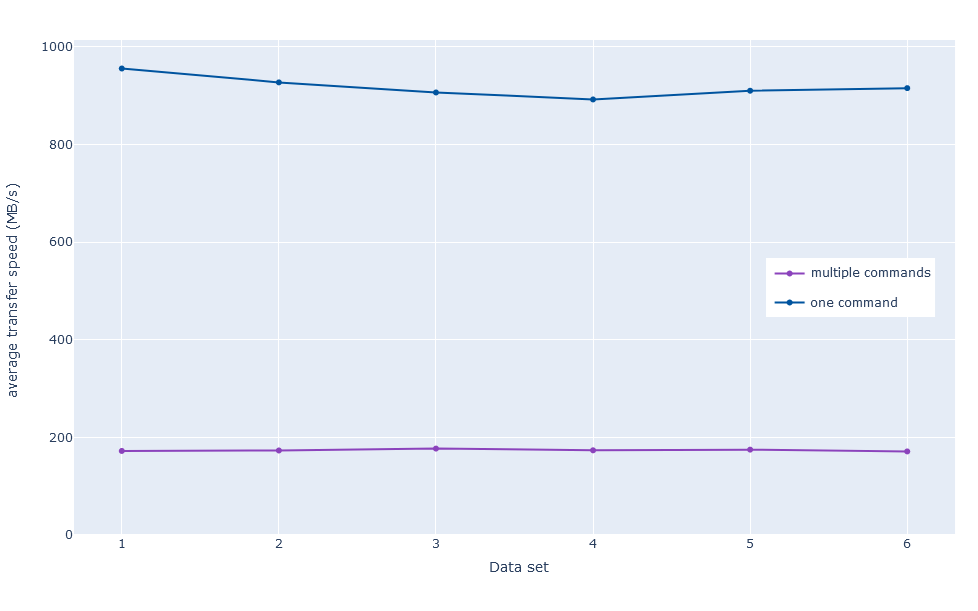

Second, how the tools are used can strongly affect transfer speeds. Data should always be transferred with a single command, which allows the tools to make full use of their parallelization. If data sets reside in different folders but will be uploaded to the same location, combining these transfers into one command can save a lot of time.
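As a rough sketch of the difference, the following Python helpers build the argument lists for one combined MinIO Client call and for the slower one-call-per-folder variant. It relies on `mc cp` accepting several sources before the target. The function names and the target `target/bench/` (alias and bucket) are hypothetical.

```python
def single_transfer_command(source_dirs, target):
    """Build one `mc cp` call that uploads all folders together."""
    if not source_dirs:
        raise ValueError("at least one source directory is required")
    # mc cp takes one or more sources followed by a single target.
    return ["mc", "cp", "--recursive", *source_dirs, target]


def per_folder_commands(source_dirs, target):
    """Build one `mc cp` call per folder (the pattern to avoid)."""
    return [["mc", "cp", "--recursive", d, target] for d in source_dirs]
```

For example, `single_transfer_command(["run_a", "run_b"], "target/bench/")` yields a single command line, whereas `per_folder_commands` yields two that would run one after the other. How source paths map to object names at the target depends on the tool's path handling and should be checked on a small test set first.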

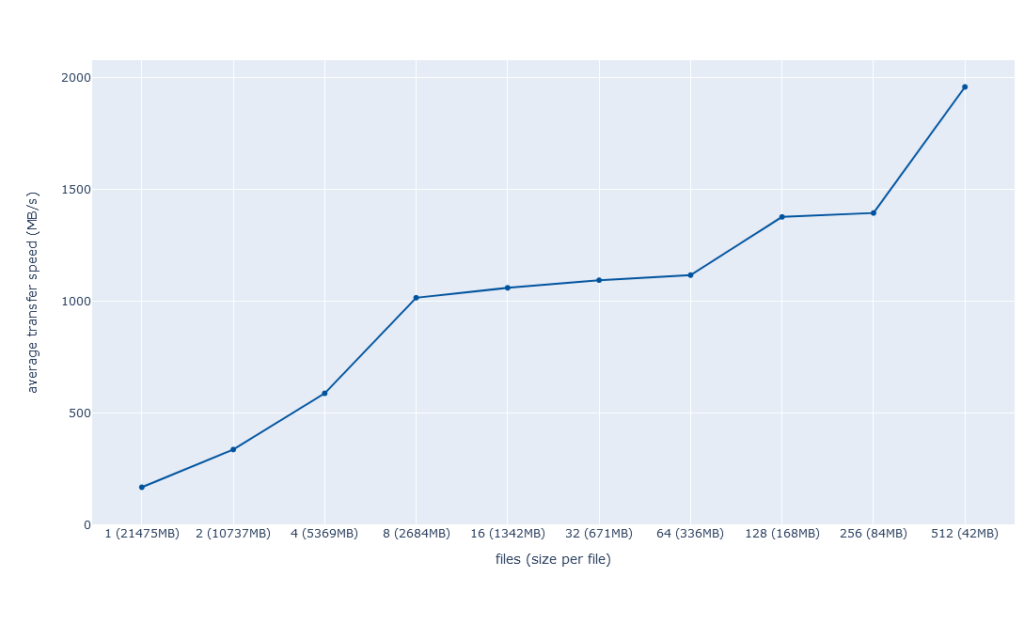

Third, how data is arranged can have a similar impact on transfer speeds. Exploiting this requires some simple preprocessing of the data set, usually packaging it as an archive file. The aim is to avoid both a huge number of very small files and large size differences between the biggest file and all others. Whether this preprocessing is worthwhile must be decided case by case, depending on the size of the data set and on whether the data is simply archived or expected to be downloaded fairly regularly.
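One possible way to do such packaging is sketched below with Python's standard `tarfile` module. The choice of an uncompressed tar archive and the function name `pack_directory` are assumptions for illustration; the text above only calls for "an archive file".

```python
import tarfile
from pathlib import Path


def pack_directory(source_dir, archive_path):
    """Bundle a folder of many small files into one uncompressed tar file.

    `archive_path` should lie outside `source_dir` so the archive does
    not end up containing itself.
    """
    source = Path(source_dir)
    with tarfile.open(str(archive_path), "w") as archive:
        # arcname keeps only the folder name, not the full local path.
        archive.add(str(source), arcname=source.name)
    return Path(archive_path)
```

The trade-off is that anyone who needs a single file later has to download and unpack the whole archive, which is why this suits archived data better than data that is read selectively.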

Put into numbers, using MinIO Client instead of rclone usually yielded two to three times higher transfer speeds. Transferring data in one command with MinIO Client reliably increased speeds roughly two- to fivefold. Outliers, presumably the result of fortunate timing on a live system shared with other users, suggest that more may be possible. Arranging data optimally (i.e., as many files of the same size above a certain threshold) had an even greater effect, in some cases allowing transfers to reach the physical limit of the connection.
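To judge how far a data set is from this arrangement, a small profiling helper can count very small files and compare the largest file with the typical one. This is only a sketch: the function name `size_profile` is hypothetical, and the threshold `small_file_bytes` must be chosen by the user, since no concrete value is given here.

```python
from pathlib import Path
from statistics import median


def size_profile(root, small_file_bytes):
    """Summarize the file size distribution below `root`.

    `small_file_bytes` is a user-chosen threshold (an assumption of
    this sketch), below which a file counts as "very small".
    """
    sizes = [p.stat().st_size for p in Path(root).rglob("*") if p.is_file()]
    if not sizes:
        raise ValueError("no files found")
    typical = median(sizes)
    return {
        "files": len(sizes),
        "small_files": sum(1 for s in sizes if s < small_file_bytes),
        # A large ratio signals one file that dwarfs all the others.
        "largest_to_median": max(sizes) / typical if typical else float("inf"),
    }
```

Many small files or a very large ratio both indicate that repackaging the data set before transfer may pay off.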

Fabian Dünzer